Cheat Sheet: Model Development

| Process | Description | Code Example |

|---|---|---|

| Linear Regression | Create a Linear Regression model object |

|

| Train Linear Regression model | Train the Linear Regression model on decided data, separating Input and Output attributes. When there is single attribute in input, then it is simple linear regression. When there are multiple attributes, it is multiple linear regression. |

|

| Generate output predictions | Predict the output for a set of Input attribute values. |

|

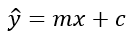

| Identify the coefficient and intercept | Identify the slope coefficient and intercept values of the linear regression model defined by  Where m is the slope coefficient and c is the intercept. Where m is the slope coefficient and c is the intercept. |

|

| Residual Plot | This function will regress y on x (possibly as a robust or polynomial regression) and then draw a scatterplot of the residuals. |

|

| Distribution Plot | This function can be used to plot the distribution of data w.r.t. a given attribute. |

|

| Polynomial Regression | Available under the numpy package, for single variable feature creation and model fitting. |

|

| Multi-variate Polynomial Regression | Generate a new feature matrix consisting of all polynomial combinations of the features with the degree less than or equal to the specified degree. |

|

| Pipeline | Data Pipelines simplify the steps of processing the data. We create the pipeline by creating a list of tuples including the name of the model or estimator and its corresponding constructor. |

|

| R^2 value | R^2, also known as the coefficient of determination, is a measure to indicate how close the data is to the fitted regression line. The value of the R-squared is the percentage of variation of the response variable (y) that is explained by a linear model. a. For Linear Regression (single or multi attribute) b. For Polynomial regression (single or multi attribute) | a.

b.

|

| MSE value | The Mean Squared Error measures the average of the squares of errors, that is, the difference between actual value and the estimated value. |

|
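For the Linear Regression rows in the table above (create, train, predict, read the coefficients), a minimal sketch follows. It assumes scikit-learn's `LinearRegression`; the toy data is hypothetical and noise-free (y = 3*x1 + 2*x2 + 5), so the fitted slopes and intercept can be checked by eye. With a pandas DataFrame the inputs would typically be selected as `df[['attr_1', 'attr_2']]` (column names hypothetical).

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Hypothetical toy data: two input attributes, one output attribute.
# The output is generated exactly as y = 3*x1 + 2*x2 + 5 (no noise).
X_multi = np.array([[1.0, 2.0],
                    [2.0, 1.0],
                    [3.0, 4.0],
                    [4.0, 3.0],
                    [5.0, 5.0]])
y = 3 * X_multi[:, 0] + 2 * X_multi[:, 1] + 5

# Simple linear regression: one input attribute (kept 2-D, as scikit-learn expects).
X_simple = X_multi[:, [0]]
lr_simple = LinearRegression()      # create the model object
lr_simple.fit(X_simple, y)          # train on inputs X and output y

# Multiple linear regression: several input attributes.
lr_multi = LinearRegression()
lr_multi.fit(X_multi, y)

# Generate output predictions for new input values.
y_hat = lr_multi.predict(np.array([[6.0, 6.0]]))

# Slope coefficient(s) m and intercept c of Y = mX + c.
print("coefficients:", lr_multi.coef_)
print("intercept:", lr_multi.intercept_)
print("prediction for (6, 6):", y_hat)
```

Because the data lies exactly on a plane, the multiple-regression fit recovers coefficients close to [3, 2], an intercept close to 5, and a prediction close to 35 for the input (6, 6).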
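For the single-variable Polynomial Regression row, a minimal sketch assuming NumPy's `polyfit` (to fit the coefficients) and `poly1d` (to turn them into a callable model). The data is hypothetical and generated from the exact quadratic y = 2x^2 - 3x + 1.

```python
import numpy as np

# Hypothetical single-variable data from an exact quadratic.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0, 5.0])
y = 2 * x**2 - 3 * x + 1

n = 2                       # polynomial degree
f = np.polyfit(x, y, n)     # least-squares coefficients, highest power first
p = np.poly1d(f)            # callable polynomial model built from those coefficients
y_hat = p(x)                # predicted outputs for the inputs

print(p)
```

Here `f` comes out close to [2, -3, 1], and `p(6.0)` evaluates to about 55.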
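For the Pipeline row, a sketch assuming scikit-learn's `Pipeline` built from a list of (name, constructor) tuples. The particular steps (scaling, degree-2 polynomial features, linear regression), the step names, and the data are illustrative choices, not necessarily those of the original cheat sheet.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Each tuple is (step name, constructor call); the names are arbitrary labels.
steps = [
    ("scale", StandardScaler()),
    ("polynomial", PolynomialFeatures(degree=2, include_bias=False)),
    ("model", LinearRegression()),
]
pipe = Pipeline(steps)

# Hypothetical noise-free data from a degree-2 polynomial in two features.
rng = np.random.default_rng(1)
Z = rng.uniform(0, 10, size=(40, 2))
y = 1.5 * Z[:, 0] ** 2 + 0.5 * Z[:, 0] * Z[:, 1] - 2 * Z[:, 1] + 4

# fit() runs scale -> polynomial -> model in order; predict() reuses the fitted steps.
pipe.fit(Z, y)
y_pipe = pipe.predict(Z)
```

Since scaling is an affine change of variables, a quadratic in the original features is still a quadratic in the scaled ones, so this pipeline reproduces the noise-free targets almost exactly.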
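For the R^2 and MSE rows: R^2 = 1 - SS_res / SS_tot, and MSE = (1/n) * sum of (y_i - y_hat_i)^2. The sketch below assumes scikit-learn: for a linear model, `score()` returns R^2; for a NumPy polynomial fit, the actual and fitted values are passed to `r2_score`. `mean_squared_error` gives the MSE in both cases. The five data points are hypothetical.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error, r2_score

# Hypothetical data.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([1.0, 3.0, 2.0, 5.0, 4.0])

# Linear regression: the model's score() method returns R^2.
X = x.reshape(-1, 1)
lr = LinearRegression().fit(X, y)
r2_linear = lr.score(X, y)
mse_linear = mean_squared_error(y, lr.predict(X))

# Polynomial regression (NumPy): compare actual and fitted values.
p = np.poly1d(np.polyfit(x, y, 2))
r2_poly = r2_score(y, p(x))
mse_poly = mean_squared_error(y, p(x))

print(f"linear:     R^2 = {r2_linear:.2f}, MSE = {mse_linear:.2f}")
print(f"polynomial: R^2 = {r2_poly:.2f}, MSE = {mse_poly:.2f}")
```

For this data the linear fit is y = 0.8x + 0.6, giving R^2 = 0.64 and MSE = 0.72. The quadratic fit contains the linear model as a special case, so its in-sample R^2 can only be equal or higher, which is why a held-out test set is usually needed to compare models fairly.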
